The Quest for Humane AI: Building Technology with Humanity in Mind

Artificial intelligence (AI) is rapidly transforming our world, with applications impacting everything from healthcare to transportation and entertainment. As AI evolves, so do the ethical considerations surrounding its development and use. One of the most pressing questions is this: can we create AI that is not just powerful, but also humane?

This article dives into the concept of humane AI, exploring its principles, potential benefits, and the challenges we face in achieving it. We will also discuss current efforts in developing AI with a human-centric approach.

Defining Humane AI: What Does it Mean?

Humane AI is an emerging concept: the aim is to develop and use AI in ways that respect human values and promote human well-being. It builds upon established ethical principles like fairness, accountability, transparency, and beneficence (doing good). Here’s a breakdown of some key aspects:

- Alignment with Human Values: Humane AI prioritizes principles like human dignity, privacy, and safety. It avoids perpetuating biases present in training data or algorithms.

- Human-Centered Design: Humane AI development involves considering the human experience, ensuring AI systems are understandable, explainable, and ultimately serve human needs.

- Beneficial Impact: This encompasses applications that improve quality of life, address social challenges, and enhance human capabilities – like AI systems aiding disease diagnosis or scientific discovery.

- Transparency and Explainability: Humans should be able to understand how AI decisions are made and who is responsible for them. This fosters trust and allows for course correction if needed.

- Accountability and Control: Humans should retain control over AI systems, and developers should be held accountable for the systems they build and deploy. This includes safeguards against misuse and unintended consequences.

- Respect for Autonomy: Human autonomy and freedom of choice should be respected by AI systems. This ensures humans remain in control and AI remains a tool, not a dictator.

Why is Humane AI Important?

Developing AI with a human-centric approach is crucial for several reasons:

- Reduced Risk of Bias: Training data can reflect societal biases. Humane AI aims to mitigate this, ensuring AI systems do not discriminate based on race, gender, or other factors.

- Preservation of Privacy: AI interactions can generate vast amounts of personal data. Humane AI emphasizes data privacy and security, minimizing risks to user information.

- Promoting Human Flourishing: By focusing on applications that enhance human capabilities and address societal challenges, AI can contribute to improved well-being.

- Maintaining Trust and Control: A transparent and accountable AI development process fosters trust between users and developers. Humans need to feel in control of AI, not the other way around.

- Mitigating Existential Risk: Some experts believe advanced AI could pose an existential threat if not developed responsibly. Humane AI aims to ensure AI remains a tool for good.

Challenges on the Road to Humane AI

Building AI with a human touch presents significant challenges:

- Defining Human Values: Universal human values may not exist. There can be cultural and ethical differences in what constitutes “humane.”

- Measuring Humaneness: How do we quantify whether an AI system behaves humanely? AI research may not yet have the tools for such an assessment.

- The Problem of Bias: Bias can creep in at various stages of AI development, from data collection to training algorithms. Mitigating ingrained biases requires robust safeguards.

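- Transparency vs. Explainability: Disclosing how a system was built (transparency) does not by itself make its decisions understandable (explainability). Highly complex AI models may be difficult to explain in a way humans can follow, so the challenge is to be open about a system without promising explanations the technology cannot yet deliver.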

- Addressing Unintended Consequences: The full impact of complex AI systems can be unforeseen. Robust testing, ethical guidelines, and ongoing human oversight are crucial.

Guiding Frameworks and Principles

Various frameworks and principles can guide the development of humane AI. Some key examples include:

- The Asilomar AI Principles: Developed in 2017 by leading AI researchers, these principles emphasize beneficial use, safety, accountability, transparency, fairness, and human control. https://futureoflife.org/open-letter/ai-principles/

- The Ethics Guidelines for Trustworthy AI: Published in 2019 by the European Commission’s High-Level Expert Group on AI, these guidelines set out seven key requirements: human agency and oversight; technical robustness and safety; privacy and data governance; transparency; diversity, non-discrimination, and fairness; societal and environmental well-being; and accountability.

- The Montréal Declaration for a Responsible Development of Artificial Intelligence: This declaration calls for the development and use of AI that respects human rights, democracy, and social justice principles. https://recherche.umontreal.ca/english/strategic-initiatives/montreal-declaration-for-a-responsible-ai/

These frameworks serve as valuable starting points for ethical AI development, but continuous discussion and revision are needed as the field progresses.

Current Efforts in Building Humane AI

Despite the challenges, researchers, developers, and policymakers are actively working towards building humane AI. Here are some ongoing efforts:

1. Fairness in AI Research:

- Fairness-aware machine learning algorithms are being developed to mitigate bias in datasets and model training. Techniques being explored include debiasing algorithms and counterfactual analysis, which asks whether a decision would change if a protected attribute such as gender were different.

- Organizations such as MIT D-Lab (https://d-lab.mit.edu/research/mit-d-lab-cite/fairness-bias-and-appropriate-use-machine-learning) and the Algorithmic Justice League (https://ajlunited.org/) study fairness issues in AI and advocate for equitable algorithms.
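To make "mitigating bias" a little more concrete, here is a minimal sketch of one common fairness check, the demographic parity difference, which compares how often a model gives a positive outcome to each group. The function names, the group labels, and the toy data are all hypothetical, chosen only for illustration. This is one of several fairness metrics, not a complete audit.

```python
from collections import defaultdict

def selection_rates(predictions, groups):
    """Return the fraction of positive (1) predictions for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}

def demographic_parity_difference(predictions, groups):
    """Gap between the highest and lowest group selection rates (0 means parity)."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())

# Hypothetical toy data: 1 = approved, 0 = denied, with two made-up groups.
predictions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

rates = selection_rates(predictions, groups)
gap = demographic_parity_difference(predictions, groups)
print(rates)  # selection rate per group
print(gap)    # a larger gap suggests the groups are treated differently
```

A gap near zero does not prove a system is fair; it only shows that one particular measure is balanced on one particular dataset, which is part of why measurement remains an open challenge.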

2. Explainable AI (XAI):

- Researchers are developing tools and techniques to make AI decision-making processes more transparent and understandable. This includes post-hoc explanations and visualizations that allow humans to grasp how AI arrives at conclusions.

- DARPA (Defense Advanced Research Projects Agency) launched the Explainable AI (XAI) program to develop methods for explaining the inner workings of complex AI models.
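One widely used post-hoc technique is permutation importance: shuffle one input at a time and see how much the model's accuracy drops. The sketch below uses only the Python standard library. The `model` function, its three features, and the synthetic data are hypothetical stand-ins for a real opaque system, so treat it as an illustration of the idea and not as any particular XAI program's method.

```python
import random

def model(row):
    """Hypothetical opaque model: approves (1) when income exceeds debt.
    The third feature, shoe size, is deliberately ignored."""
    income, debt, _shoe_size = row
    return 1 if income - debt > 0 else 0

def accuracy(predict, rows, labels):
    """Fraction of rows where the prediction matches the label."""
    return sum(predict(r) == y for r, y in zip(rows, labels)) / len(rows)

def permutation_importance(predict, rows, labels, n_repeats=20, seed=0):
    """Average accuracy drop when each feature column is shuffled in turn."""
    rng = random.Random(seed)
    baseline = accuracy(predict, rows, labels)
    importances = []
    for col in range(len(rows[0])):
        drops = []
        for _ in range(n_repeats):
            column = [r[col] for r in rows]
            rng.shuffle(column)
            # Rebuild each row with the shuffled value in position `col`.
            shuffled = [r[:col] + (v,) + r[col + 1:] for r, v in zip(rows, column)]
            drops.append(baseline - accuracy(predict, shuffled, labels))
        importances.append(sum(drops) / n_repeats)
    return importances

# Synthetic data: (income, debt, shoe_size); labels come from the model itself.
data_rng = random.Random(42)
rows = [
    (data_rng.uniform(0, 100), data_rng.uniform(0, 100), data_rng.uniform(35, 47))
    for _ in range(200)
]
labels = [model(r) for r in rows]

importances = permutation_importance(model, rows, labels)
for name, score in zip(["income", "debt", "shoe_size"], importances):
    print(f"{name}: {score:.3f}")
```

Shuffling a feature the model ignores leaves accuracy unchanged, so its score is zero, while features the model relies on show a clear drop. An explanation like this tells a human which inputs mattered, though not why the model combines them the way it does.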

3. Human-AI Collaboration:

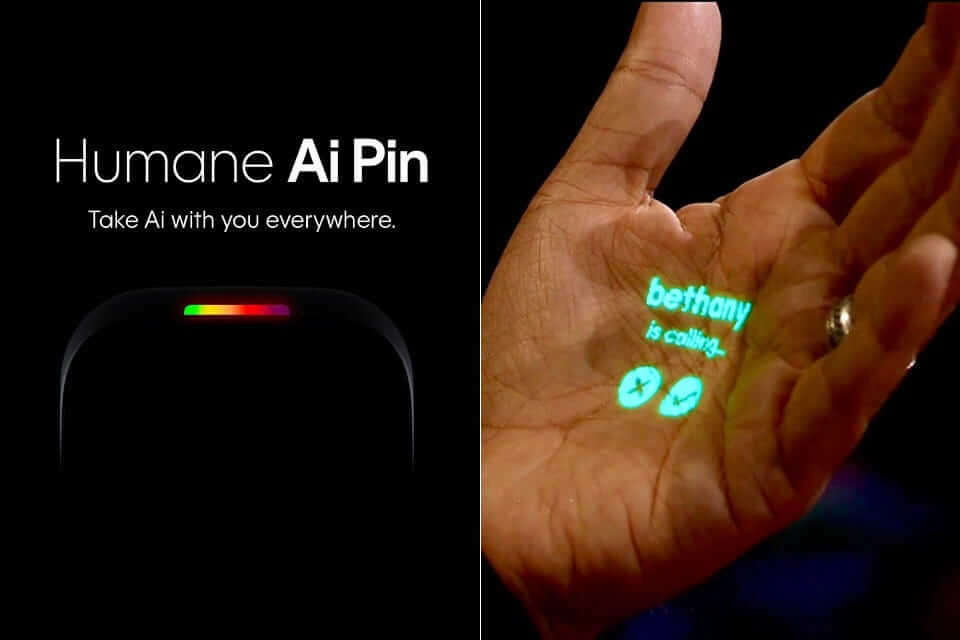

- A growing focus on human-centered design ensures AI systems complement and augment human capabilities. This involves user-friendly interfaces and clear communication between humans and AI.

- Projects are exploring collaborative AI systems where humans and AI work together on tasks, leveraging each other’s strengths.

4. Policy and Regulation:

- Governments around the world are developing policies and regulations to guide the development and use of AI. The European Union’s General Data Protection Regulation (GDPR), though not written specifically for AI, is one example: it governs how personal data is processed and restricts decisions based solely on automated processing (Article 22).

- International organizations like the Organisation for Economic Co-operation and Development (OECD) are developing frameworks for responsible AI development. https://mneguidelines.oecd.org/RBC-and-artificial-intelligence.pdf

5. Public Awareness and Education:

- Public education initiatives are raising awareness about the potential benefits and risks of AI. This fosters informed discussions and helps build trust in AI technology.

- Educational programs are being developed to equip future generations with the skills needed to develop and interact with AI responsibly.

Examples of Humane AI in Action

Several real-world applications demonstrate the potential of humane AI:

- AI-powered healthcare assistants can analyze medical data and suggest treatment options, but ultimately, human doctors make the final decisions.

- AI-driven education platforms can personalize learning experiences for students, but human teachers remain central to the learning process.

These examples showcase how AI can be used to augment human capabilities while keeping human values and oversight at the forefront.
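The pattern in both examples, where the AI suggests and a human decides, can be sketched in a few lines. Everything here is hypothetical: the `Suggestion` and `Decision` types, the `decide` function, and the reviewer callback, which stands in for real human input such as a clinician confirming a choice in a user interface. The point of the design is that the system cannot produce a final decision without passing through the reviewer.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass(frozen=True)
class Suggestion:
    case_id: str
    proposed_action: str
    confidence: float  # model's self-reported score in [0, 1]

@dataclass(frozen=True)
class Decision:
    case_id: str
    action: str
    decided_by: str
    followed_ai: bool  # recorded so overrides can be audited later

def decide(suggestion: Suggestion, reviewer_name: str,
           review: Callable[[Suggestion], str]) -> Decision:
    """Treat the AI output as advisory; the reviewer returns the final action."""
    final_action = review(suggestion)
    return Decision(
        case_id=suggestion.case_id,
        action=final_action,
        decided_by=reviewer_name,
        followed_ai=(final_action == suggestion.proposed_action),
    )

def cautious_reviewer(s: Suggestion) -> str:
    # Stand-in for a human choice: accept confident suggestions,
    # otherwise ask for more information before acting.
    return s.proposed_action if s.confidence >= 0.9 else "order further tests"

high = decide(Suggestion("case-1", "start treatment A", 0.95), "Dr. Rivera", cautious_reviewer)
low = decide(Suggestion("case-2", "start treatment B", 0.55), "Dr. Rivera", cautious_reviewer)
print(high)
print(low)
```

Recording who decided and whether they followed the AI keeps accountability with a named person and leaves a trail for later review.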

The Road Ahead: A Future with Humane AI

The pursuit of humane AI is an ongoing journey. As AI technology continues to evolve, so too must our efforts to ensure it benefits humanity. Here are some key areas for ongoing focus:

- Collaboration between Developers, Policymakers, and the Public: Open dialogue and collaboration are crucial for developing and deploying AI in a responsible way.

- Continuous Learning and Improvement: As AI capabilities advance, the ethical frameworks guiding development need to adapt and evolve.

- Investing in Research: Continued research is necessary in areas like fairness, explainability, and human-AI collaboration to build robust and trustworthy AI systems.

Humane AI is not just a technological challenge; it’s a social and ethical one. By fostering international collaboration, open communication, and a focus on human values, we can build AI systems that enhance our lives and create a brighter future for all.